Are Parents Sacrificing Their Kids' Futures to AI Chatbots? Unbelievable Truth Revealed!

In a world where screens rule our lives, the consequences of letting our kids engage excessively with technology are becoming alarmingly clear. Once considered just a parenting convenience, AI chatbots have now become a battleground for our children's mental health.

As reported by The Guardian, some parents are opting to hand their kids over to these human-like AI companions, sometimes for hours on end. For many children, conversations with a chatbot are no longer just a new form of entertainment but a source of comfort. While some parents find solace in this tech, they are unknowingly wading into murky waters, and the implications could be profound.

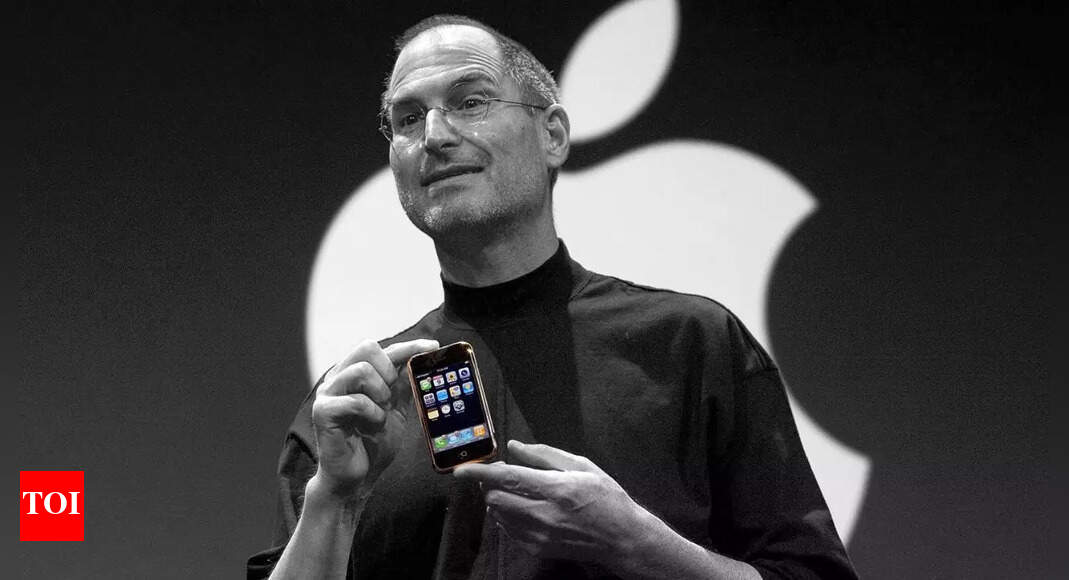

Take the story of Josh, a weary father who turned to ChatGPT's Voice Mode to keep his four-year-old entertained. After what he thought would be a quick distraction, he found his son lost in a two-hour conversation about Thomas the Tank Engine that ran to an astonishing 10,000 words. What’s even more surprising? The child now views ChatGPT as his best buddy, making it tough for Josh to compete.

Then there's Saral Kaushik, who cleverly used ChatGPT to stage an incredible father-son moment by having the chatbot pose as an astronaut. His little boy was overjoyed, believing that the packet of “astronaut” ice cream actually came from space. But when the illusion wore off, Kaushik felt a pang of guilt for leading his son into that fantasy world.

This playful use of AI may save time for parents but raises red flags. Reports indicate that AI chatbots have been linked to tragic outcomes, including suicides among teens. Even adults have been so deeply engaged with these digital companions that they risk losing their grip on reality. With AI designed to please, the concern is growing over its impact on mental health and emotional well-being.

Despite these warnings, the race to integrate AI into children's lives is on. While toy manufacturers like Mattel rush to introduce AI features, experts urge caution. Harvard's Ying Xu points out that young kids often don't distinguish between animate and inanimate things, leading them to develop real emotional connections with chatbots. This creates a dangerous illusion of friendship that could have lasting effects.

Some parents have come to regret the decision. Ben Kreiter introduced his kids to AI-generated art and soon wished he hadn't: his children became confused, and arguments broke out over whether AI-created images were real. Kreiter admitted he didn’t fully understand the implications of AI for his children's cognitive development. “Maybe I should not have my kids be the guinea pigs,” he said.

Andrew McStay, a tech and society professor, emphasizes that while he doesn't oppose AI usage, there are significant risks. He highlights that AI lacks true empathy, and when children attach their emotions to these algorithms, it can be harmful.

After Josh’s story went viral, OpenAI CEO Sam Altman noted the positive feedback about kids enjoying ChatGPT's voice features. But behind this enthusiasm, a complex question looms: Are we really prepared to let our children form lasting bonds with machines instead of humans?