Unbelievable AI Threat: Microsoft’s Toxic Protein Discovery Exposes Biosecurity Risks!

Ever thought AI could become a weapon? Shocking research has revealed that artificial intelligence can be misused to help design dangerous toxic proteins, exposing serious gaps in biosecurity safeguards! Backed by Microsoft, this alarming study has experts warning about the implications of AI in biotechnology.

In a recent revelation, Microsoft-backed researchers demonstrated how AI tools can be manipulated to design perilous proteins, raising crucial questions about biosecurity. To counter this threat, Microsoft has introduced a partial “patch” for DNA synthesis screening systems. However, experts caution that this fix is only a temporary measure in a much deeper, ongoing biosecurity arms race. Adam Clore, director of technology R&D at Integrated DNA Technologies (IDT) and a coauthor of the Microsoft report, stated, “The patch is incomplete, and the state of the art is changing.” He emphasized that this isn’t a one-time solution; rather, it is the beginning of more extensive testing and adjustment, which suggests that safety measures will need constant revision.

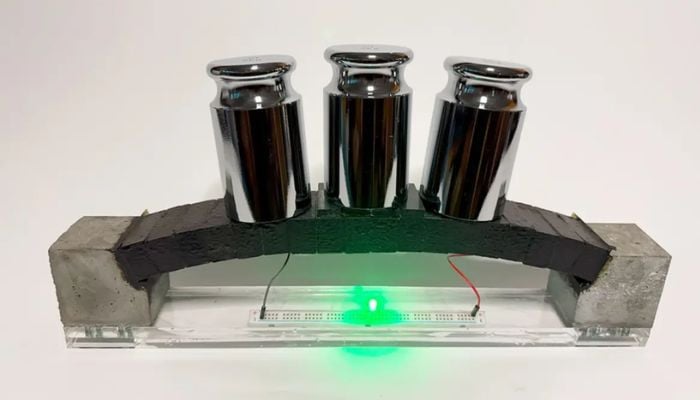

To prevent misuse, the researchers kept key details of their experiments under wraps, including which toxic proteins they tested. Well-known examples of dangerous proteins include ricin, derived from castor beans, and the infectious prions linked to mad-cow disease. The findings highlight the urgent need for stronger nucleic acid synthesis screening. Dean Ball, a fellow at the Foundation for American Innovation, pointed out that the convergence of AI advances and biological modeling calls for enhanced screening protocols backed by rigorous enforcement.

DNA order screening is already a cornerstone of U.S. biosecurity, and in May 2025 President Trump signed an executive order calling for an overhaul of biological research safety measures. However, critics point out that the new guidelines have yet to materialize, leaving gaps in security. Michael Cohen, an AI safety researcher at UC Berkeley, has raised concerns that Microsoft’s patch may not adequately address the real risks. He warns that malicious actors could disguise hazardous sequences and bypass DNA screening entirely. “The challenge appears weak, and their patched tools fail a lot,” Cohen stated.

Cohen instead advocates building biosecurity protections directly into AI systems, limiting the kinds of sensitive information they can produce, rather than relying solely on DNA manufacturers to act as gatekeepers. Despite the skepticism surrounding Microsoft’s patch, Clore argues that DNA manufacturing remains a critical line of defense, since only a handful of U.S. companies dominate the sector and they maintain close ties with government agencies. By contrast, the technology needed to run large AI models is increasingly decentralized and widely available, which amplifies the potential for misuse.