Are AI-Designed Viruses the Next Global Threat? Experts Sound the Alarm!

Imagine a world where artificial intelligence doesn't just analyze our lives but creates new viruses. This startling reality is unfolding as scientists harness AI to design entirely new viruses, including bacteriophages, which infect bacteria rather than people. While these viruses might not pose a direct threat to humans, the underlying technology raises alarms about the potential for misuse.

This week, a team of researchers from Microsoft made headlines by revealing that existing biosecurity measures are alarmingly easy for AI to bypass. In a study published in the journal Science, they demonstrated that AI-generated designs can slip past the screening software meant to prevent the development of bioweapons. With the capability to design pathogenic viruses potentially just a few model updates away, experts are racing to fortify our defenses against this emerging threat.

The dual-use dilemma is at the core of this issue. While AI can be used to create life-saving treatments, it can just as easily be weaponized. For example, a scientist might modify a virus to understand how it spreads, but in the wrong hands, that same research could lead to a pandemic. This precarious balance between innovation and safety is prompting calls for multi-layered biosecurity systems.

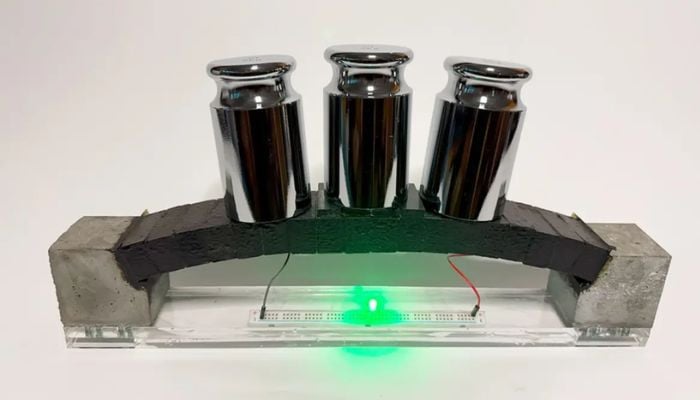

In a fascinating twist, researchers Sam King and Brian Hie are working on bacteriophages that could help combat antibiotic resistance. Their efforts have led to promising developments, yet the risk remains that such work could unintentionally produce harmful viruses. To mitigate this, they’ve built strict safeguards into their AI models, which are not trained on data from viruses that could infect humans or animals.

However, the rapid evolution of AI technology means that these safeguards might soon prove insufficient. As AI models become more capable and more adept at circumventing safeguards, there is an urgent need for robust screening tools and regulation. Experts stress that we must be proactive in understanding how these models operate and how we can effectively manage their risks.

This concern was echoed by Tina Hernandez-Boussard, a professor at Stanford University, who emphasized the necessity of careful training data selection. She warned that AI models could easily override safety measures if they’re not designed thoughtfully.

The Microsoft team’s findings are particularly alarming because they showed that existing software meant to screen for dangerous genetic sequences can be fooled by AI-generated designs. Even after patches were deployed to enhance security, 3% of potentially dangerous sequences still slipped through. This statistic underscores the ongoing battle between innovation and safety in the world of biotechnology.

The issue of AI in bioweapons isn’t just a theoretical discussion; it demands action. With calls for regulations and safety screenings gaining traction, the global community is recognizing that it cannot afford to wait. There’s a thrilling yet unsettling frontier ahead of us, where the line between helpful and harmful blurs, and it’s up to us to ensure we don’t cross it.