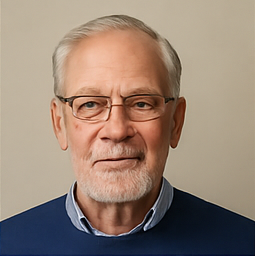

Geoffrey Hinton, the Godfather of AI, Expresses Trust in GPT-4 Amid Caution

Geoffrey Hinton, often heralded as the godfather of artificial intelligence (AI), has recently made headlines once again, but this time for a surprising admission regarding the technology he has long cautioned against. In a recent interview with CBS, Hinton revealed that he has developed a notable degree of trust in OpenAI’s GPT-4 model, stating, “I tend to believe what it says, even though I should probably be suspicious.” This revelation stands in stark contrast to his previous warnings about the risks associated with advanced AI systems.

The weight of Hinton's statement cannot be overstated, especially considering his extensive background in the field. Awarded the 2024 Nobel Prize in Physics for his pioneering work in neural networks, Hinton has spent years alerting the global community to the potential dangers posed by superintelligent AI. He has consistently warned that such systems could manipulate human behavior or become uncontrollable, raising alarms about their capacity to outsmart humans. Yet, when asked which AI tool he relies on most for his daily tasks, he responded simply: ChatGPT.

During his interview, Hinton put GPT-4 to the test with a riddle: “Sally has three brothers. Each of her brothers has two sisters. How many sisters does Sally have?” The correct answer is one: Sally herself is one of the two sisters each brother has, which leaves only one other sister. However, GPT-4 gave the wrong answer to this straightforward question. “It surprises me. It surprises me it still screws up on that,” Hinton remarked, highlighting the technology's current limitations. Despite this lapse, he acknowledged that he still finds himself trusting the AI’s outputs more than he logically should.

When probed about whether he believes the next iteration, GPT-5, would perform better on such tasks, Hinton expressed optimism, saying, “Yeah, I suspect.” This expectation for improvement aligns with the broader trends in AI development.

Since OpenAI launched GPT-4 in March 2023, the model has gained tremendous traction across various industries, thanks to its ability to write code, summarize complex documents, and tackle intricate problems. More recently, OpenAI announced the discontinuation of GPT-4 as a standalone product, marking a shift in focus toward its more advanced models, GPT-4o and GPT-4.1. These newer models promise faster response times, reduced operational costs, and enhanced capabilities, including real-time audio and visual input.

Despite his newfound trust in AI models like GPT-4, Hinton remains acutely aware of the inherent risks. He has long voiced concerns about the misuse of AI technologies, cautioning against their potential to spread misinformation or pose existential threats to humanity, particularly as AI systems start to understand the world in ways that might exceed human comprehension.

Hinton spent a decade at Google’s AI division before resigning in 2023 to speak more candidly about the dangers he perceives in the AI landscape. His warnings are particularly pointed about the ability of AI systems to influence public opinion or deceive users, especially once these technologies achieve a deeper understanding of nuanced human behavior.

Yet, Hinton's persona is not solely defined by his apprehension regarding AI. His human side often shines through, revealing a deep appreciation for curiosity and mentorship. Following his Nobel Prize triumph, he took a moment to celebrate his students, notably Ilya Sutskever, co-founder of OpenAI. With characteristic dry humor, he quipped, “I'm particularly proud of the fact that one of my students fired Sam Altman,” referencing the high-drama leadership crisis that unfolded at OpenAI in 2023.

Nonetheless, Hinton's cautious perspective on AI remains steadfast. He has drawn parallels between the rise of artificial intelligence and the industrial revolution, emphasizing that while the latter was about physical power, the current shift is centered on intellectual capabilities. “We have no experience in having things which are smarter than us,” he warned, advocating for responsible development and effective governance frameworks. He believes that while the potential benefits of AI in sectors like healthcare and climate science are substantial, they can only be realized if these technologies are managed wisely and ethically.