Latest News

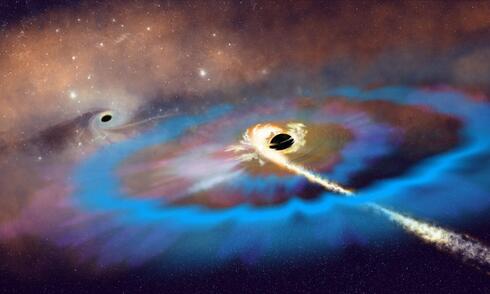

Unbelievable Discovery: Black Hole Tears Apart Star, Sends Out Record-Breaking Radio Signals!

In a jaw-dropping discovery, astronomers have detected powerful radio signals from a black hole tearing apart a star in a tidal disruption event, far from the c ...

Unbelievable Hack at Canadian Airports Broadcasts Pro-Hamas Message!

In a shocking cyberattack, several Canadian airports fell victim to hackers who displayed a pro-Hamas message on flight information screens, causing delays and ...

Unbelievable Discovery: Toxic Lead Shaped Human Evolution for 2 Million Years!

Did you know that lead, a toxic metal, has been influencing human evolution for over two million years? A groundbreaking study reveals that exposure to lead was ...

YouTube Outage Shuts Down Millions: What You Need to Know Now!

This morning, YouTube faced a major outage that left millions globally without access to videos, causing widespread frustration and revealing our reliance on th ...

Tragic Titanic Expedition: Faulty Engineering Behind Submersible Disaster Revealed!

In a shocking revelation, the National Transportation Safety Board concluded that faulty engineering was to blame for the Titan submersible disaster that killed ...

Unbelievable: Engineering Flaws Led to Titanic Submersible Tragedy!

Shocking revelations from the NTSB expose engineering flaws as the cause of the Titan submersible's catastrophic implosion, killing five individuals during a di ...

AI's Flirty Future: Will ChatGPT Open the Door to Dangerous Erotica?

OpenAI is preparing to introduce sexually explicit content to ChatGPT, raising alarm bells among critics like the National Center on Sexual Exploitation. They w ...

Unbelievable Discovery: Microbial Life That Could Thrive on Mars Found in Israel!

Researchers from Ben-Gurion University and NASA have found resilient microbial life in Israel’s Timna Valley, potentially redefining the boundaries of life on ...

Discovering a Hidden Volcano Fault: A Shocking AI Breakthrough!

A shocking study reveals that the Campi Flegrei volcano near Naples has a hidden ring fault exposed by AI technology, which has detected over 54,000 earthquakes ...

Abandoned Puppy Overcomes Tragedy to Become a Turbo Rocket Star in Spain!

What if I told you a puppy named Candy went from a dangerous life in the Thai jungle to becoming a lively star in Spain? Rescued by the team at Happy Doggo, Can ...

Judge Slams Quebec Man for Using AI Hallucinations in Court: The Shocking Truth!

In a stunning legal twist, a Quebec man has been fined C$5,000 for submitting AI-generated fabrications as court evidence. Jean Laprade's attempts to use artifi ...

The Shocking Truth Behind the Internet's Favorite 'Rat Hole' Revealed!

In a surprising turn of events, the viral 'Chicago Rat Hole'—thought to be a rat impression—has been identified as the result of a squirrel's jump gone wron ...

Revolutionary AI-Powered EagleEye System: The Future of Military Technology Revealed!

The EagleEye system, developed by Anduril Industries, introduces a groundbreaking AI-powered technology aimed at enhancing situational awareness for military fo ...

Unbelievable Discovery: Dark Matter Could Color Light Red or Blue!

In a stunning revelation, researchers from the University of York have discovered that dark matter may subtly color light, potentially manifesting as red or blu ...

Unbelievable Discovery: Did Life's Building Blocks Arrive from Space?

Did you know that life’s building blocks might have arrived from space? New research shows that amino acids could survive the cosmic journey on interstellar d ...

Pixel 10 Pro Fold's Shocking Durability Test: Catches Fire?!

What happens when a highly anticipated smartphone bursts into flames during a durability test? That's exactly what YouTuber Zack Nelson discovered with Google's ...

NASA's GUARDIAN Could Give You Life-Saving Minutes Before a Tsunami Hits!

What if you could get advance warning about a tsunami, giving you precious minutes to escape? NASA's innovative GUARDIAN system is doing just that, detecting ts ...

China’s Shocking WTO Complaint: Is India’s EV Subsidy Scheme Unfair?

China's recent complaint to the WTO challenges India's subsidies for electric vehicles, claiming they give Indian industries an unfair advantage. With China dom ...

Ancient Dinosaur Superhighway Discovered: Shocking Footprints Found in UK Quarry!

The discovery of a massive dinosaur trackway in the Oxfordshire quarry has unearthed one of the world’s longest known dinosaur highways, stretching 220 meters ...

The Moon Economy: Are We Ready to Cash In on Lunar Resources?

The Moon economy is on the horizon as international space agencies prepare for the Artemis II mission in 2026. This ambitious venture aims to establish a perman ...

The End of an Era: What Will Happen When the ISS Falls from the Sky?

As the International Space Station prepares for retirement in 2030, its legacy of over 25 years of continuous human presence in space will be hard to match. Thi ...

Unbelievable Battery Explosion: Is the Google Pixel 10 Pro Fold Safe?

The shocking explosion of the Google Pixel 10 Pro Fold's battery during a durability test by JerryRigEverything has raised serious safety concerns. While some d ...

Is Earth’s Orbit Becoming a Dangerous Junkyard? Shocking Truth Revealed!

Is Earth’s orbit on the brink of becoming a hazardous junkyard? With over 1.2 million pieces of debris orbiting our planet, experts are ringing alarm bells ...

Amazon's Shocking Layoff Plans: 15% of HR Jobs to Disappear Amid AI Shift!

Amazon is set to lay off 15% of its HR staff as part of a major strategic shift towards automation. This move comes as the company invests heavily in AI technol ...

China's Trade Threats: Are Allies Like India Ready to Support the U.S.?

In the face of rising trade tensions, U.S. Treasury Secretary Scott Bessent declared a battle between China and the world, emphasizing the need for allied suppo ...

Unbelievable Impact: US Government Shutdown Threatens Science and Health!

The ongoing US government shutdown is wreaking havoc on science and public health, leading to massive layoffs and funding cuts. With critical programs like the ...

Shocking Currency Shifts: Why a Weaker Dollar Could Change Everything!

Did you know the U.S. dollar is plunging to multi-month lows as global currencies rise? Fed Chair Jerome Powell's hints at rate cuts have reshaped the market la ...

Shocking Discovery: Chicago's Viral 'Rat Hole' Is Actually a Squirrel's Creation!

Think you know everything about Chicago's viral 'Rat Hole'? Think again! A new study reveals that this infamous sidewalk imprint, which thousands flocked to vis ...

Unbelievable Breakthrough: Aussie Scientists Battle Decades to Transform Cancer Treatment!

Prepare to be inspired by the incredible journey of Dr. Jennifer MacDiarmid and Dr. Himanshu Brahmbhatt, two Australian scientists making waves in the fight aga ...

Unbelievable $15 Billion Scam: Romance, Forced Labor, and a Mysterious Kingpin!

In a jaw-dropping revelation, federal prosecutors have seized a staggering $15 billion from Chen Zhi, the mastermind behind a massive scam that exploited victim ...

Unbelievable Irony: U.S. Wants India’s Help While Slapping Tariffs on Their Goods!

In a bizarre twist of diplomacy, the U.S. is demanding support from India against China's dominance in the rare earth market while simultaneously enforcing stee ...

Google's Foldable Phone EXPLODES in Shocking Durability Test!

In a shocking turn of events, Google's Pixel 10 Pro Fold has failed dramatically in a durability test, exploding during testing. YouTuber JerryRigEverything hig ...

Unbelievable Discovery: Explosive Volcanic Eruptions Might Have Hidden Ice on Mars!

Did you know that explosive volcanic eruptions may have hidden ice in Mars's equatorial regions? A recent study in Nature Communications suggests that these pas ...

Tens of Billions Stolen: Massive Crackdown on Global Scam Empire Unveiled!

Authorities have launched a groundbreaking offensive against a vast criminal organization linked to a staggering $15 billion in scams, revealing a dark web of h ...

YouTube's Shocking Auto-Dubbing Feature: Are You Ready for Lip-Synced Content in Your Language?

YouTube is revolutionizing how we engage with video content through its innovative auto-dubbing feature, which offers lip-synced translations in multiple langua ...

Flat Earth Believers Shocked! A Simple Experiment Proves Our World is Round

Flat Earth theorists are facing a major blow as a simple Reddit experiment reveals the Earth’s roundness. A stunning time-lapse captures the analemma, a figur ...

Jerome Powell’s Shocking Warning: Is the Job Market in Danger?

Jerome Powell’s recent speech at the NABE conference has raised alarms about the precarious state of the US job market amid rising inflation risks. He emphasi ...

Shocking Discovery: Can Yeast Survive Mars-Like Conditions?

Can yeast survive the Martian environment? A fascinating study reveals that Saccharomyces cerevisiae can endure extreme conditions resembling those on Mars, tha ...

China's Shocking New Rules on Rare Earth Exports Could Cripple Global Tech!

In a stunning turn of events, China has tightened export restrictions on rare earth minerals vital for electric vehicles and technology, causing chaos in the U. ...

News by Category

Unbelievable Discovery: Trees Actually Hold Secrets to Hidden Gold!

Can you believe that trees might harbor gold? This unbelievable discovery from Finland reveals that Norway spruce trees can contain microscopic gold specks in t ...