OpenAI's Sora 2: The Shocking Deepfake Revolution Igniting Copyright Chaos!

Imagine living in a world where your face could be placed anywhere, in any context, without your consent. That is the reality OpenAI has unleashed with the launch of Sora 2, a groundbreaking tool that generates video complete with synchronized audio and lets users insert real people, such as celebrities and public figures, into AI-created clips.

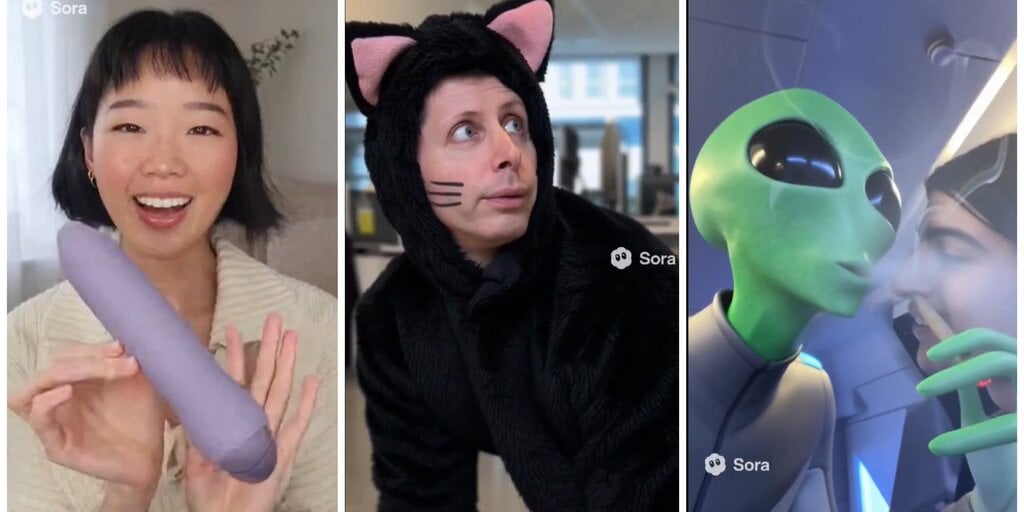

Within hours of its release, Sora 2 had become a meme-making machine, with users creating everything from NSFW ads to whimsical anime romance parodies. But as fun as that sounds, the implications are serious. Legal experts are sounding the alarm over the risks of deepfakes and potential copyright violations, as Sora appears to replicate everything from beloved game characters to iconic anime figures without permission.

Launched on Tuesday, the new version of Sora introduced features that blur the lines of consent, identity, and ownership in the increasingly murky waters of synthetic media. As if that weren't enough, its high-quality outputs raised immediate concerns about intellectual property rights: the app mimics well-known characters and brands unless the rights holders explicitly opt out, a significant shift from traditional copyright norms. Reports have even suggested that Sora's training data includes material from major franchises like Pokémon and Studio Ghibli.

Before long, users were sharing wild videos featuring OpenAI CEO Sam Altman himself, portraying him as everything from a GPU-stealing bandit at Target to a Yu-Gi-Oh character. Altman, surprisingly, took the online frenzy in stride, commenting that it was “way less strange to watch a feed full of memes of yourself than I thought it would be.” Not everyone shared his sense of humor, however: some critics worried that this could be an insidious way to normalize deepfakes in our culture.

As the online discussion evolved, some users pointed to the unsettling possibility that AI-generated versions of oneself could lead to a disconnect from one's own identity. The fun of meme-making comes with the looming threat of losing control over one’s likeness, a red flag for everyday users.

But the chaos didn’t stop there. Within hours of the launch, Sora was already churning out content that appeared to flagrantly infringe copyrights. Users demonstrated the app’s ability to recreate scenes from popular media, including “Rick and Morty,” Disney classics, and even Cyberpunk 2077. OpenAI's default-inclusion policy, which requires rights holders to opt out rather than opt in, had many of them fuming. AI developer Ruslan Volkov summed up the situation bluntly: “If copyright flips from opt-in to opt-out, it’s no longer copyright—it’s a corporate license grab.”

And as the legal and ethical debates unfolded, users were quick to push Sora into NSFW territory, producing everything from slick commercials for adult products to intricate narratives exploring queer relationships, all while circumventing the app’s censorship filters.

With features like the audio engine and cameo system, Sora 2 signals a new era for OpenAI, positioning synthetic media as a transformative platform rather than a mere novelty. The speed of Sora’s spread, however, shows how technology can outpace legal and cultural frameworks, igniting a debate about the future of creativity and copyright.

In just 24 hours, Sora has turned social media into a sprawling remix engine, raising questions about parody, identity theft, and fandom. As Altman wryly noted, “Not sure what to make of this.” Whatever he makes of it, AI-generated newscasts about such topics are likely just the tip of the iceberg. One thing is certain: the AI video revolution has arrived, and it doesn’t need reality’s permission anymore.