Revolutionary AI Model Decodes Brainwaves into Words: A Leap Towards Mind-Reading Technology

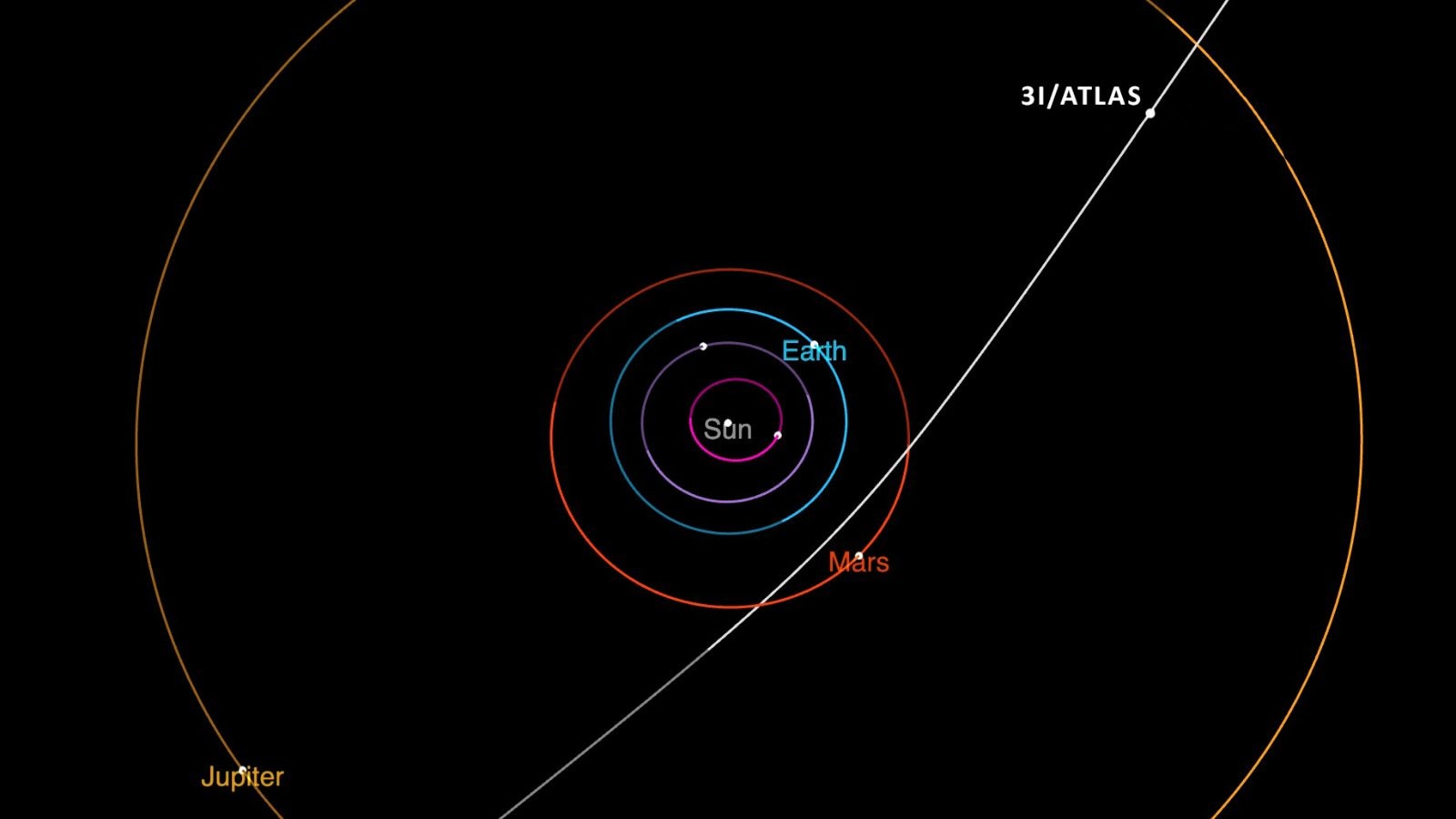

Imagine controlling your smartphone simply by thinking about it, or wearing a device that could enhance your concentration and memory without any physical input. This might sound like the plot of a science fiction movie, but researchers at the University of Technology Sydney (UTS) are working to make it a reality with a brain-computer interface powered by artificial intelligence (AI).

Leading this groundbreaking work is postdoctoral research fellow Daniel Leong, who, along with his PhD student Charles (Jinzhao) Zhou and supervisor Chin-Teng Lin, is exploring the potential of AI to interpret our thoughts. The setup involves a high-tech electrode cap that resembles a swimming cap, equipped with 128 electrodes that detect electrical impulses generated by brain activity. This process, known as electroencephalography (EEG), is commonly used by medical professionals to diagnose brain disorders.

The UTS researchers are employing EEG technology to read the thoughts of subjects like Dr. Leong. As he silently mouths a sentence, the electrodes capture the brainwave patterns associated with those thoughts. The AI model, which uses deep learning, then translates these brain signals into specific words. Deep learning is loosely inspired by the way the human brain works: the model is trained on large amounts of data, in this case EEG recordings from different individuals, and learns to interpret brainwave signals.
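To give a feel for what "learning from examples and then decoding a word" means, here is a deliberately simple sketch. It does not use deep learning and is not the UTS model: it swaps in a much simpler technique, a nearest-centroid classifier, and every number in it is a made-up stand-in for processed EEG data. The function names and the three-value feature vectors are assumptions chosen purely for illustration.

```python
import math

def centroid(vectors):
    """Mean of equal-length feature vectors, computed per dimension."""
    n = len(vectors)
    return [sum(dim) / n for dim in zip(*vectors)]

def train(examples):
    """Build one centroid per word from {word: [feature_vector, ...]}."""
    return {word: centroid(vectors) for word, vectors in examples.items()}

def decode(model, features):
    """Return the word whose centroid is closest (Euclidean) to the features."""
    return min(model, key=lambda word: math.dist(model[word], features))

# Hypothetical training data: made-up features standing in for EEG recordings
# captured while a participant silently mouths each word.
training_examples = {
    "jumping": [[0.9, 0.1, 0.2], [1.1, 0.0, 0.3]],
    "happy": [[0.1, 1.0, 0.1], [0.2, 0.8, 0.0]],
    "me": [[0.0, 0.2, 1.0], [0.1, 0.1, 0.9]],
}
model = train(training_examples)

# New, unseen recordings (also made up) are matched to the closest known word.
new_recordings = [[1.0, 0.2, 0.1], [0.0, 0.9, 0.2], [0.1, 0.0, 1.1]]
decoded = [decode(model, features) for features in new_recordings]
print(decoded)  # ['jumping', 'happy', 'me']
```

A real system has to cope with far noisier signals, many more words and differences between individuals, which is where deep learning and larger datasets come in.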

During a demonstration, Dr. Leong silently reads the phrase “jumping happy just me,” and the AI decodes these thoughts into a coherent sentence: “I am jumping happily, it’s just me.” This transformation occurs without any verbal input from Dr. Leong, relying solely on the brainwaves captured by the EEG. Although the current model has learned only a limited vocabulary, the demonstration shows the potential for communicating thoughts accurately.

To enhance the AI’s capabilities, the UTS team is actively recruiting more participants to expand their dataset, aiming to refine the model further. One of the challenges they face is the inherent noise in EEG signals, which arises because the technology measures brain activity from outside the skull. Professor Lin emphasizes the importance of using AI to filter out this noise, allowing for clearer and more accurate identification of speech markers.
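Why more recordings help with a noisy signal can be illustrated with a classical technique called trial averaging. This is not the AI-based filtering Professor Lin describes. It is a simpler stand-in, and the 10 Hz "brain response", the 250 Hz sampling rate, the noise level and the number of trials are all assumptions made up for this sketch.

```python
import math
import random

def average_trials(trials):
    """Average time-aligned recordings sample by sample."""
    n = len(trials)
    return [sum(samples) / n for samples in zip(*trials)]

def rms_error(signal, reference):
    """Root-mean-square difference between a signal and a reference."""
    squared = sum((s - r) ** 2 for s, r in zip(signal, reference))
    return math.sqrt(squared / len(reference))

random.seed(0)  # fixed seed so the sketch is repeatable

# Hypothetical clean response: a 10 Hz sine wave sampled at 250 Hz for 1 second.
fs = 250
clean = [math.sin(2 * math.pi * 10 * t / fs) for t in range(fs)]

# 50 simulated recordings of the same response, each buried in random noise.
trials = [[c + random.gauss(0, 2.0) for c in clean] for _ in range(50)]

single_error = rms_error(trials[0], clean)
averaged_error = rms_error(average_trials(trials), clean)
print(f"error of one recording:    {single_error:.2f}")
print(f"error after averaging 50:  {averaged_error:.2f}")
```

Because the random noise differs from recording to recording while the underlying response stays the same, averaging cancels much of the noise. Averaging only works when the same signal is repeated, though, which is one reason learned filtering is attractive for decoding free-form speech.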

Historically, brain-computer interfaces have been pivotal in assisting individuals with disabilities. Two decades ago, a man with quadriplegia became the first recipient of a device implanted in his brain that enabled him to control a computer cursor. Today, tech entrepreneurs like Elon Musk aim to provide autonomy to those with similar challenges through modern implantable devices. However, UTS’s non-invasive EEG approach offers the advantage of portability and ease of use, making it accessible to a broader population.

Experts in the field, such as bioelectronics authority Mohit Shivdasani, acknowledge the transformative role AI plays in identifying previously unrecognized brainwave patterns. “What AI can do is learn very quickly what specific brain activity corresponds to various actions for each individual,” he explains. This personalized approach is crucial for developing a more effective brain-computer interface.

Currently, the UTS team has achieved approximately 75% accuracy in converting thoughts to text, with aspirations to reach 90% accuracy—similar to that of current implanted models. Dr. Shivdasani suggests that this technology could revolutionize rehabilitation for stroke patients and enhance speech therapy for individuals with autism, utilizing a closed-loop system that provides real-time feedback based on brain activity.

Looking to the future, researchers envision applications that extend beyond rehabilitation. They speculate about the potential to enhance cognitive functions such as attention, memory, and emotional regulation. “As scientists, we look at medical conditions and think about how we can restore lost functionalities through our technology,” Dr. Shivdasani notes. “Once we’ve achieved that, the possibilities are endless.”

However, before we can seamlessly communicate with our devices using only our thoughts, the technology must become more user-friendly. Professor Lin points out that wearability is a key factor—the current EEG caps are cumbersome and impractical for everyday use. Future developments may include integration with augmented reality glasses or even earbud designs that capture brain signals.

Finally, the advancement of such powerful technology raises vital ethical questions. As Dr. Shivdasani highlights, “We must consider how we will use these tools and the ethical implications of our capabilities.” These questions become more pressing as researchers venture into the uncharted territory of brain-machine interfaces.