Shocking AI Tool Lets You Create Nonconsensual Deepfakes of Celebrities – Here’s What You Need to Know!

Imagine a world where you can create digital replicas of your favorite celebrities, complete with outrageous scenarios, all with a few clicks. Welcome to the unsettling reality of Grok Imagine. On Tuesday, Elon Musk’s xAI launched an image and video generator that’s raising eyebrows and igniting debates about consent and ethics in the age of artificial intelligence. This tool, which boasts a ‘spicy’ mode, allows users to conjure images that can range from suggestive to explicit, effectively blurring the lines of morality, legality, and privacy.

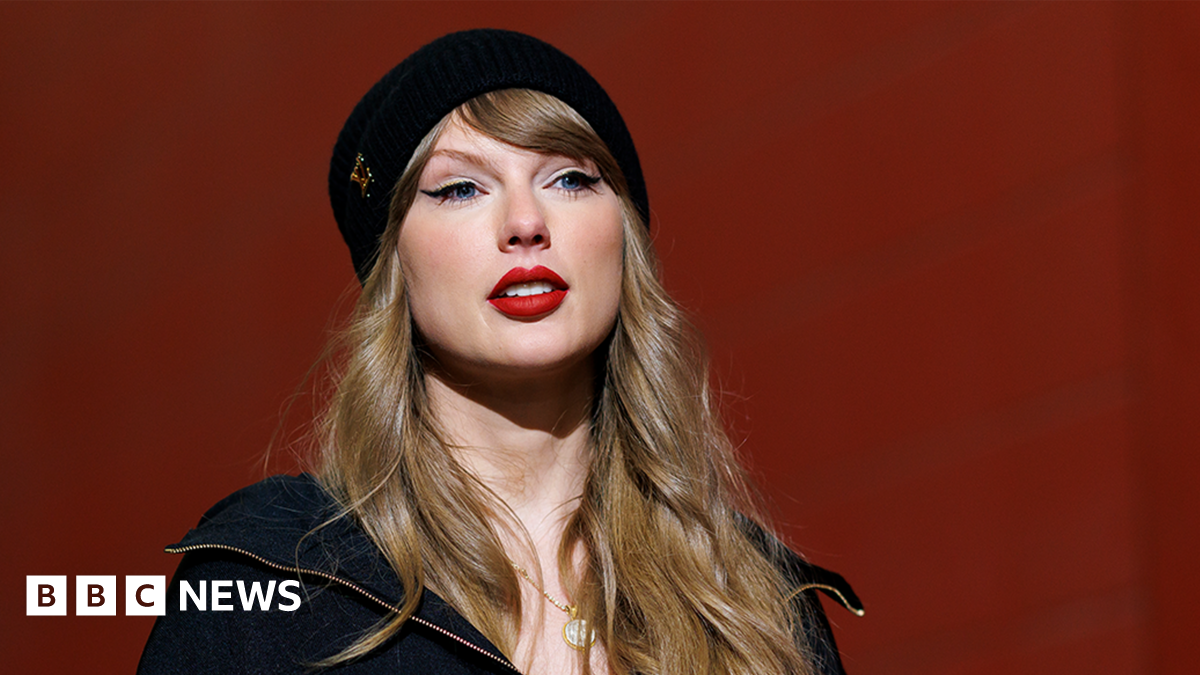

What’s alarming is the contrast: while teenage girls struggle to find reliable information about periods on platforms like Reddit, this AI tool offers anything but a wholesome digital experience, for just $30 a month. That’s right: for the price of a handful of lattes, you could potentially generate nonconsensual deepfakes of A-listers like Taylor Swift. And this is not just a theoretical discussion. Musk himself gleefully announced that over 34 million images were generated within a day of the launch, a sign of staggering demand for the tool.

While the internet grapples with efforts to sanitize content and restrict access to sexual material, Grok Imagine arrives flaunting its capabilities. It’s launching at a time when platforms like Steam and Itch.io are under pressure to remove adult games and content, and when newly enforced UK regulations aimed at protecting users under 18 are tightening access to adult material. In stark contrast, xAI seems to be waving a flag of rebellion against these restrictions, apparently sidestepping the emerging rules surrounding online adult content.

Nonconsensual deepfake pornography is a form of nonconsensual intimate imagery, which the recently enacted Take It Down Act makes illegal to publish in the U.S. The Rape, Abuse & Incest National Network (RAINN) has publicly condemned Grok’s new feature, calling it “part of a growing problem of image-based sexual abuse” and pointedly noting that Grok appears to be operating as if it hasn’t heard of the law.

Legal experts such as Mary Anne Franks, a law professor and president of the Cyber Civil Rights Initiative, suggest that Grok may be shielded from liability under the current law. Why? The Take It Down Act targets the ‘publication’ of such content, meaning the material must be made available to the public. If Grok shows these generated videos only to the user who requested them, it is unclear whether anything has been published at all. Franks also points out that Grok might not even qualify as a ‘covered platform’ under the law’s definitions, which would exempt it from the takedown obligations that other platforms face.

This loophole reflects a broader pattern of weak enforcement against tech giants like Musk’s companies. While smaller platforms are pressured to adhere to stringent content guidelines, companies backed by vast resources and political influence often evade accountability. It’s frustratingly ironic that as xAI slips past these emerging laws, smaller services struggle to operate under their weight.

The deeper implications of Grok’s launch cannot be overlooked. It starkly illustrates the gap between the promise of a safer internet and the reality we inhabit. While independent platforms are forced to censor consensual content, a billionaire’s company profits from a tool that can create damaging material while treading a fine line of legality. As we navigate this new digital frontier, one thing is clear: the rules don’t apply equally, and in 2025, discussions about sex are less about the act itself than about the power dynamics that govern it.