Congress Passes Take It Down Act to Combat Nonconsensual Intimate Images

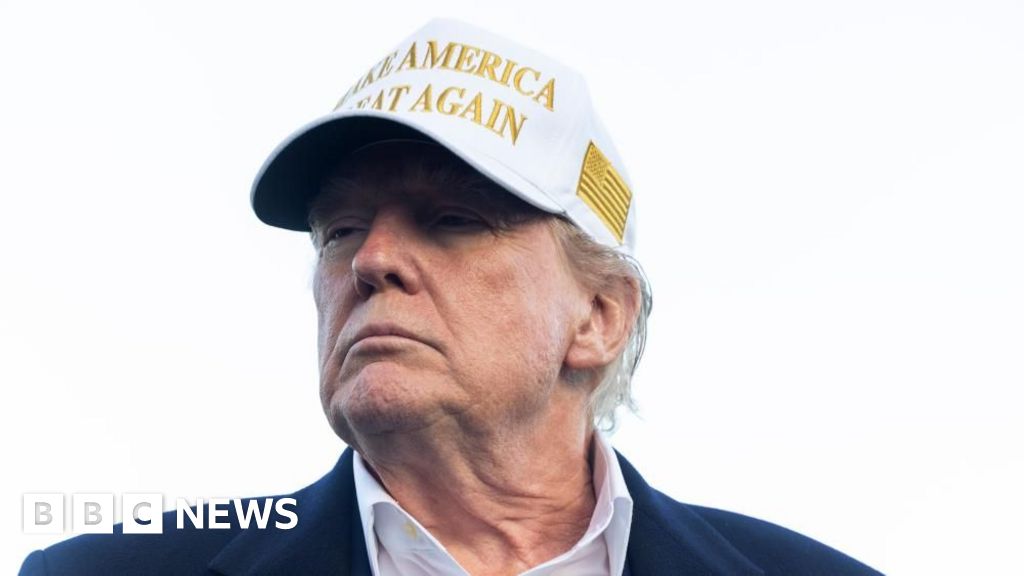

In a significant, if cautious, step for digital rights and protections, the U.S. federal government has moved to address the rampant spread of nonconsensual intimate images online. On Monday, Congress passed the Take It Down Act by a near-unanimous margin. The bill targets the distribution of such images, including so-called 'revenge porn' and AI-generated deepfakes. With President Trump indicating his readiness to sign the bill into law, digital rights advocates are raising alarms about potential pitfalls in its language and its lack of crucial safeguards against misuse.

The Take It Down Act was introduced by Senators Ted Cruz and Amy Klobuchar in 2024. It criminalizes the unauthorized distribution of nonconsensual intimate images (NCII) and requires covered online platforms to set up a process for reporting such content and to remove it within 48 hours of notification. The removal deadline is meant to spare victims further trauma, while the criminal provisions are meant to hold offenders accountable.

The bill has drawn an unusual level of bipartisan support, with endorsements not only from legislators on both sides of the aisle but also from members of Trump's inner circle. First Lady Melania Trump, for instance, hosted a White House roundtable on the issue in March, signaling the administration's commitment to addressing it. During his address to a joint session of Congress, Trump expressed his eagerness to sign the bill, stating, "I'm going to use that bill for myself too, if you don't mind, because nobody gets treated worse than I do online, nobody."

The bill's progress through Congress has been meteoric. It passed the Senate unanimously and then cleared the House by an overwhelming 409-2 vote. Senator Cruz hailed this as a "historic win," highlighting the bill's potential to make a difference in the lives of victims. In a statement, he emphasized the importance of social media companies acting promptly to take down abusive content, thereby sparing victims from repeated trauma.

Senator Klobuchar echoed Cruz's sentiments, stressing that these images can ruin lives and reputations. She noted that the new law will empower victims to have their nonconsensual images removed from social media platforms while enabling law enforcement to pursue those who perpetrate such violations.

Despite the positive reception, experts caution that while the Take It Down Act is a step in the right direction, it may not go far enough to protect all victims. Many states already prohibit nonconsensual pornography, but these laws often fail to protect a growing number of victims, particularly women, who report higher rates of victimization. A 2019 study found that one in twelve participants had experienced such victimization at least once in their lifetime. The rise of artificial intelligence has complicated matters further: these tools can generate sexual imagery depicting both adults and children, leaving states grappling with how to define and regulate AI-generated deepfakes.

While the Take It Down Act appears to offer a solid framework for addressing these issues, not everyone is convinced. On the day of its passage, the Cyber Civil Rights Initiative (CCRI), a group dedicated to combating nonconsensual imagery, raised several concerns. It warned that the bill's takedown provisions are highly susceptible to misuse and likely to be counterproductive for victims. A central criticism is the absence of safeguards against fraudulent complaints, which could let people exploit the law for ulterior motives, such as getting legitimate content removed.

The Electronic Frontier Foundation (EFF) also weighed in with skepticism, warning that platforms' reliance on automated filters to comply with the law could lead to significant overreach. These blunt tools could remove lawful content without proper review, especially under the law's tight 48-hour deadline. As a result, platforms may choose to remove content preemptively rather than risk legal repercussions.

Such concerns are not baseless. Proponents argue that the bill could provide substantial protections, but historical precedent counsels caution. The Digital Millennium Copyright Act (DMCA) takedown system, for instance, was inundated with more than thirty thousand false notices between June 2019 and January 2020, illustrating how easily broad takedown mechanisms can be misused. YouTube's infamous "takedown first, ask questions later" approach is a cautionary example of how a well-intentioned takedown regime can be wielded to stifle free expression.

As the Take It Down Act heads to the President's desk, additional legislation such as the DEFIANCE Act is also in the pipeline. This proposed law would give deepfake victims the right to sue those who create, share, or receive such images, potentially complementing the protections established by the Take It Down Act. Yet, as Nick Garcia, Senior Policy Counsel at Public Knowledge, aptly summarized: "This was a chance to get it right, but unfortunately, Congress only got it half right, and half-right laws can do real damage."