Meta's Muscle-Reading Wristband: Control Devices with Barely a Twitch!

Imagine a world where you can control your computer with barely a twitch of a finger! Researchers at Meta have taken a big step towards that reality with a wristband-style device that lets you interact with computers using hand gestures or even, according to the researchers, by merely intending a movement.

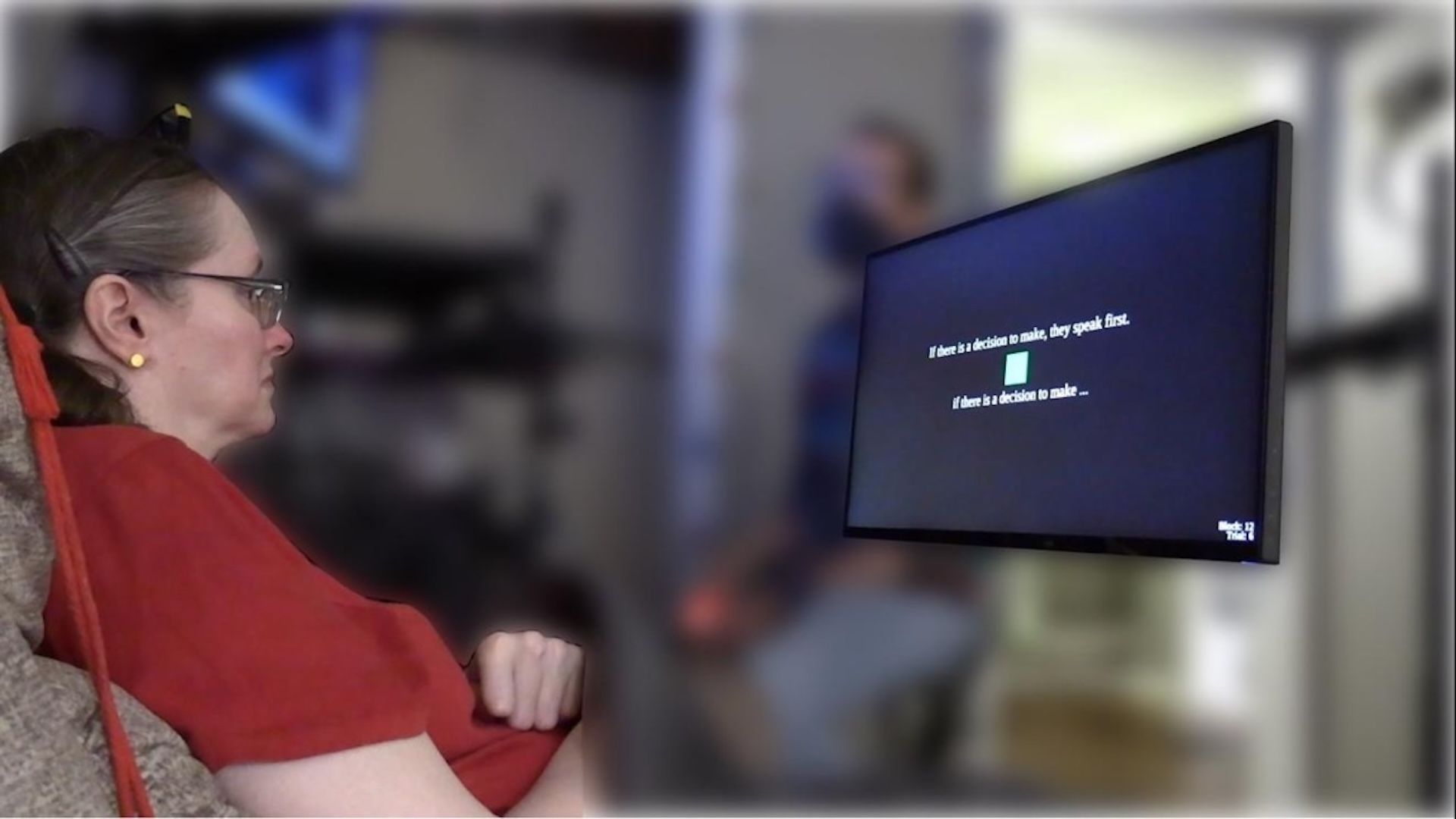

In a recent blog post, Meta unveiled this innovative Bluetooth device, which allows users to control their computers while keeping their hands comfortably at their sides. Sounds like magic, right? But it’s not just about moving a cursor around; users can actually type messages by “writing” letters in the air!

What’s the secret sauce behind this futuristic gadget? It relies on surface electromyography (sEMG), a non-invasive technique that measures the electrical activity of muscles through sensors on the skin. Worn at the wrist, it can pick up the signals behind subtle hand movements, and even intended ones.

Meta believes that sEMG at the wrist could change how we interact with computers. As the blog post puts it, “Based on our findings, we believe that surface electromyography (sEMG) at the wrist is the key to unlocking the next paradigm shift in human-computer interaction.” It’s a bold claim, but if it holds up, it would mark a significant step towards more seamless interaction between humans and machines.

Alongside the announcement, Meta published a research paper in the journal Nature that goes deeper into the technology behind the device. The paper describes it as a “generic non-invasive neuromotor interface” that decodes computer input from sEMG. Sounds heavy, but it’s essentially a fancy way of saying that it translates your muscle signals into commands.
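To make “muscle signals into commands” a little more concrete, here is a toy sketch in Python. It is not Meta’s method, which relies on neural networks trained on data from many people. It only illustrates the general idea with a much simpler stand-in: measure how strong the signal is in short windows, and emit a command when the strength rises above a threshold. The function names, the synthetic signal, the window size, and the threshold are all made up for illustration.

```python
import math

def rms_envelope(samples, window):
    """Root-mean-square amplitude of each non-overlapping window."""
    envelope = []
    for start in range(0, len(samples) - window + 1, window):
        chunk = samples[start:start + window]
        envelope.append(math.sqrt(sum(x * x for x in chunk) / window))
    return envelope

def detect_activations(envelope, threshold):
    """Indices of windows where the envelope first rises to the threshold."""
    onsets = []
    active = False
    for i, level in enumerate(envelope):
        if level >= threshold and not active:
            onsets.append(i)  # rising edge: muscle activity just started
        active = level >= threshold
    return onsets

# Synthetic stand-in for a single sEMG channel (hypothetical values):
# quiet baseline, a short burst of activity, then quiet again.
rest = [0.01, -0.02, 0.015, -0.01]
burst = [0.5, -0.6, 0.55, -0.45]
signal = rest * 2 + burst * 2 + rest * 2

envelope = rms_envelope(signal, window=4)
onsets = detect_activations(envelope, threshold=0.1)

# Map each detected burst to a made-up command name.
commands = ["click" for _ in onsets]
print(onsets, commands)
```

A real system has to tell many different gestures apart and cope with differences between wearers, which is where the machine learning comes in.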

Advances in machine learning and AI are what make this possible. According to Meta researchers, their neural networks were trained on data from thousands of participants, which helps them interpret subtle gestures accurately across a diverse range of users. Think about that for a moment: your computer could pick up what you want to do from the faintest muscle signal at your wrist!

As Thomas Reardon, one of the paper’s authors, told the New York Times, you don’t even need to move; you just need to intend the move. This could open up a world of possibilities for people who face mobility challenges, making computers more accessible than before. And unlike Neuralink’s technology, which requires a brain implant, Meta’s device is worn on the wrist and needs no surgery.

However, there’s a catch: Meta’s blog post didn’t disclose the device’s name, pricing, or release date. For now, it feels more like a research project than a product ready for the mass market. But who knows? As the technology continues to evolve, we might just find ourselves tapping into the future sooner than we think!