How ChatGPT Led to a Man's Mental Health Crisis: The Shocking Truth Behind Bromism!

It’s a chilling reminder that seeking health advice from artificial intelligence can take a dangerous turn! A man, aiming to cut chloride from his diet, ended up battling hallucinations and paranoia after making a risky dietary change based on ChatGPT’s suggestion.

This startling incident began when a 60-year-old man decided to consult the AI chatbot before making adjustments to his eating habits. The digital assistant suggested replacing sodium chloride (table salt) with sodium bromide, a seemingly innocent swap that turned out to be anything but. Just three months later, he found himself in the emergency room with severe psychiatric symptoms that left doctors puzzled.

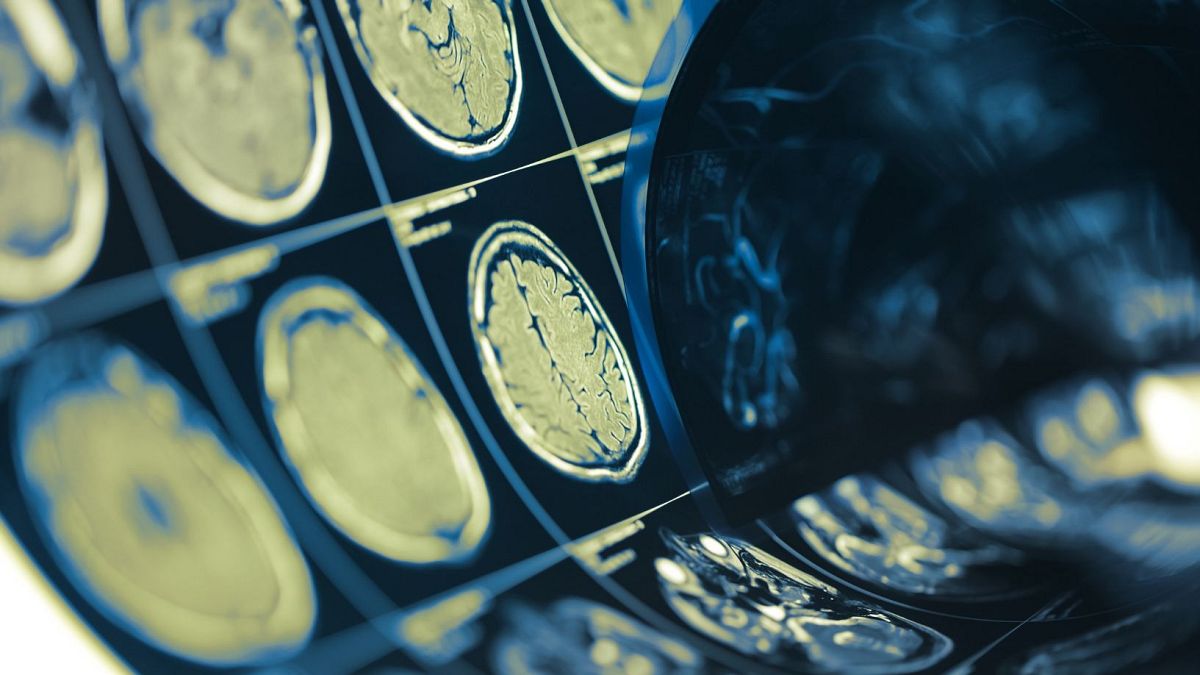

After a series of tests, he was diagnosed with bromism, a rare syndrome caused by excessive exposure to bromide, which can accumulate in the body and wreak havoc on mental health. His condition resulted from consuming sodium bromide he had purchased online, illustrating the potential dangers of relying on AI for health advice.

The case report was published on August 5 in Annals of Internal Medicine: Clinical Cases. In response to the incident, OpenAI, the developer of ChatGPT, reiterated that its services are not intended for diagnosing or treating health conditions and emphasized the importance of seeking professional advice rather than relying solely on AI-generated information.

Bromide salts were common ingredients in sedatives and other medications in the late 19th and early 20th centuries but fell out of favor as the risks of chronic exposure became apparent. Symptoms of bromism range from memory problems to full-blown psychosis, as seen in this unfortunate case. Although bromism has become rare, recent cases tied to dietary supplements show that the problem is not entirely behind us.

Before this health crisis, the man had been reading about the harms of excessive sodium chloride intake and decided to experiment with his diet. Rather than simply cutting back on table salt, he set out to eliminate chloride altogether and replaced the sodium chloride in his diet with sodium bromide, a move that ultimately landed him in a psychiatric unit.

According to the case report, after three months of consuming sodium bromide, he arrived at the hospital convinced that his neighbor was trying to poison him. Tests revealed an alarming buildup of carbon dioxide and abnormal blood chemistry caused by bromide, and his treatment included hydration and psychiatric medication. He was eventually discharged, but his case stands as a stark reminder of the risks of mixing AI with health advice.

This cautionary tale serves as a crucial reminder: while artificial intelligence can be a helpful tool, it should never replace professional medical guidance.