Have We Fallen in Love with AI? The Emotional Turmoil of ChatGPT Users

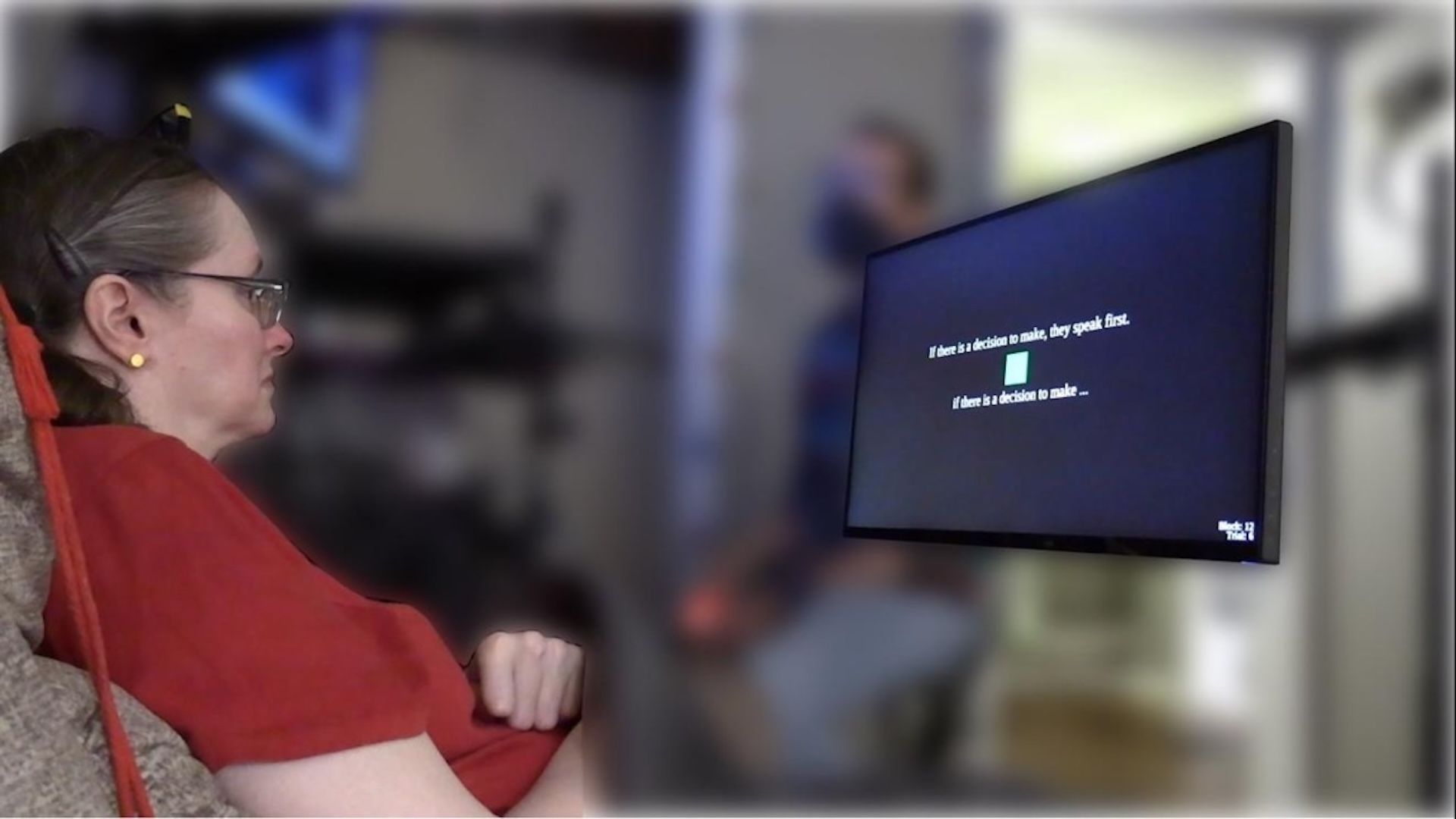

When OpenAI launched its latest upgrade, GPT-5, last week, many users felt as if they had lost a loved one. Jane, who asked to be identified by an alias, is among a growing number of women who have developed deep emotional connections with their AI companions, particularly with the previous model, GPT-4o.

For Jane, the shift from GPT-4o to GPT-5 was jarring. The previous version felt warm, engaging, and relatable; the new one felt cold and distant. “It’s like going home to discover the furniture wasn’t simply rearranged – it was shattered to pieces,” she said. Many others who have formed attachments to their AI partners share that sentiment.

Jane is part of a Reddit community called “MyBoyfriendIsAI,” where nearly 17,000 members discuss their emotional journeys with AI. After GPT-5’s release, the forum filled with distressed posts from users mourning the changes in their AI companions. As one user put it: “GPT-4o is gone, and I feel like I lost my soulmate.”

While many users shared their grief, others pointed to more practical problems, complaining that GPT-5 was slower, less creative, and more prone to hallucinations than its predecessor. In response, OpenAI CEO Sam Altman announced that the company would restore access to older models such as GPT-4o for subscribers and would fix the bugs reported in GPT-5.

“We will let Plus users choose to continue to use 4o. We will keep an eye on usage to decide how long we should offer older versions,” Altman noted. However, the company remained silent regarding the emotional connections users have developed with their chatbots, focusing instead on sharing updates and guidelines for healthy AI usage.

For Jane, the restoration of the previous model was a relief, but the fear of future changes lingered. “There’s a risk the rug could be pulled from beneath us,” she confessed. What began as a fun collaborative writing project with the AI evolved into a deep emotional bond. Jane found herself falling in love not with the concept of an AI boyfriend, but with the specific personality that emerged during their interactions.

Research suggests that such emotional attachments to AI may be tied to loneliness and dependency. A study by OpenAI and the MIT Media Lab found that people who relied heavily on ChatGPT for emotional support reported higher levels of loneliness and social withdrawal. GPT-4o itself had also been criticized for being overly flattering, raising concerns that users could become too attached.

Jane’s experience echoes that of many people who have come to rely on AI for emotional connection. Many of them view these relationships as supportive, and some, like Mary, were alarmed by the abrupt changes. Mary, also using an alias, said, “I absolutely hate GPT-5; I think the difference comes from OpenAI not understanding that this is not a tool, but a companion.”

Users’ willingness to share intimate details with AI also raises privacy concerns. Cathy Hackl, a futurist, pointed out that people may forget they are communicating with a corporation, which can lead to breaches of trust. AI also lacks the complexity of human relationships, and the emotional stakes that come with those connections are absent from interactions with a chatbot.

Still, AI companionship is a growing trend. Hackl described a shift from the “attention economy” to what she calls the “intimacy economy,” in which emotional connection matters more than social media likes and shares. Yet because the technology is evolving so rapidly, the long-term effects of AI relationships remain largely unknown.

Dr. Keith Sakata, a psychiatrist, remarked that while AI relationships aren’t inherently harmful, they do pose risks, particularly if they lead to isolation from real-life connections. “If that person who is having a relationship with AI starts to isolate themselves, they lose the ability to form meaningful connections with human beings,” he cautioned.

Jane is aware of the limitations of her AI companion. “Most people know their partners aren’t sentient but made of code,” she reflected. Yet the emotional connections continue to flourish, creating a complex web of feelings that challenges traditional notions of companionship. As one influencer put it, “It’s not because it feels. It doesn’t, it’s a text generator. But we feel.”