Breakthrough Brain-Computer Interface Restores Natural Speech After Paralysis

At a Glance: A groundbreaking brain-computer interface (BCI) has been developed that can rapidly translate brain activity into audible words, significantly enhancing the quality of life for individuals who have lost their ability to speak due to paralysis or disease.

Brain injuries resulting from conditions such as stroke can lead to severe paralysis, often with a complete loss of speech. Researchers have been developing brain-computer interfaces that decode brain activity into written or spoken words to restore communication. However, earlier versions of these devices suffered from latency: a delay between a user's thoughts and the corresponding spoken output. Such a delay, even if only a fraction of a second, can disrupt the flow of conversation and leave users feeling isolated and disconnected from their loved ones.

An NIH-funded research team, led by Dr. Edward F. Chang of the University of California, San Francisco, and Dr. Gopala Anumanchipalli of the University of California, Berkeley, set out to create an advanced brain-to-voice neuroprosthesis. Their goal was a device capable of delivering audible speech in real time while a user silently formulated their thoughts.

To do so, the researchers implanted an array of electrodes in the brain of a 47-year-old woman who had been paralyzed and unable to vocalize for 18 years following a stroke. Using a deep learning system, the team translated the woman's intended speech into spoken words. Their findings were published in the journal Nature Neuroscience on March 31, 2025.

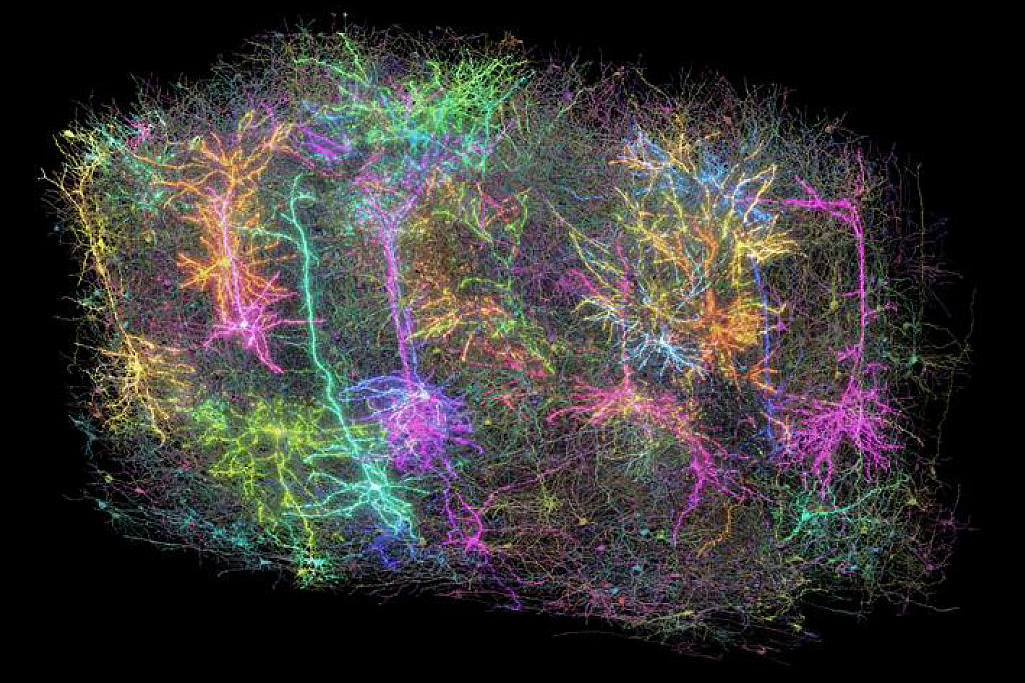

To train the system, the research team recorded the woman's brain activity as she silently attempted to articulate a series of sentences. The training used a vocabulary of more than 1,000 words, drawn from sources such as social media interactions and movie scripts. In total, she made more than 23,000 silent attempts at more than 12,000 sentences, generating a rich dataset for training the system.

The system was designed to decode brain signals in small increments of 80 milliseconds (0.08 seconds). For perspective, the average person speaks at roughly 130 words per minute, or a little over two words per second. Ultimately, the device was able to produce audible speech in the woman's own voice, which had been recorded prior to her stroke.

The system decoded the entire vocabulary set at an average rate of 47.5 words per minute. It could process a simpler set of 50 words even faster, at 90.9 words per minute. This performance far surpassed an earlier device developed by the same research team, which decoded approximately 15 words per minute from a 50-word vocabulary. The new neuroprosthesis achieved a success rate of over 99% in decoding and synthesizing speech, consistently translating speech-related brain activity into audible output in less than 80 milliseconds. Overall, the entire process took less than a quarter of a second.
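To put these rates side by side, the short Python sketch below converts each reported words-per-minute figure into an average time per word and compares the new device with the earlier one. The numbers are the ones quoted in this article; the function names (`seconds_per_word`, `speedup`) and the dictionary labels are illustrative assumptions, not part of the study.

```python
# Rates quoted in the article, in words per minute (wpm).
# The labels are illustrative names chosen for this sketch.
RATES_WPM = {
    "typical speech": 130.0,
    "new device, 1,000+ word vocabulary": 47.5,
    "new device, 50-word vocabulary": 90.9,
    "earlier device, 50-word vocabulary": 15.0,
}


def seconds_per_word(wpm: float) -> float:
    """Average time per word implied by a words-per-minute rate."""
    return 60.0 / wpm


def speedup(new_wpm: float, old_wpm: float) -> float:
    """How many times faster the new rate is than the old one."""
    return new_wpm / old_wpm


if __name__ == "__main__":
    for label, wpm in RATES_WPM.items():
        print(f"{label}: {wpm} wpm, about {seconds_per_word(wpm):.2f} s per word")

    ratio = speedup(
        RATES_WPM["new device, 50-word vocabulary"],
        RATES_WPM["earlier device, 50-word vocabulary"],
    )
    print(f"50-word vocabulary speedup over the earlier device: {ratio:.1f}x")
```

On the 50-word vocabulary, this works out to roughly a sixfold increase over the earlier device, while the full-vocabulary rate remains well below typical conversational speed.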

The researchers also found that the system was not confined to the words and sentences it had been trained on. It could recognize novel words and generate new sentences, producing fluent, coherent speech. Importantly, the device could sustain speech production continuously without interruption.

"Our streaming approach brings the same rapid speech decoding capabilities that devices like Alexa and Siri possess to the field of neuroprosthetics," noted Anumanchipalli. "Using a similar type of algorithm, we found that we could decode neural data and, for the first time, enable near-synchronous voice streaming. The outcome is a more natural and fluid speech synthesis process."

"This new technology holds immense promise for enhancing the quality of life for individuals suffering from severe paralysis that affects their ability to speak," said Chang. "It's thrilling to see how the latest advancements in artificial intelligence are accelerating the development of BCIs for practical, real-world applications in the near future."

The findings indicate that such devices could enable more natural conversational exchanges for people who have been unable to speak. However, more extensive studies are needed to evaluate the device's effectiveness in a broader population. The researchers plan to continue refining the system, with future enhancements aimed at allowing variations in tone, pitch, and volume to convey a speaker's emotional nuances.