Revolutionary Brain-Computer Interface Could Restore Voices for Those with Speech Loss

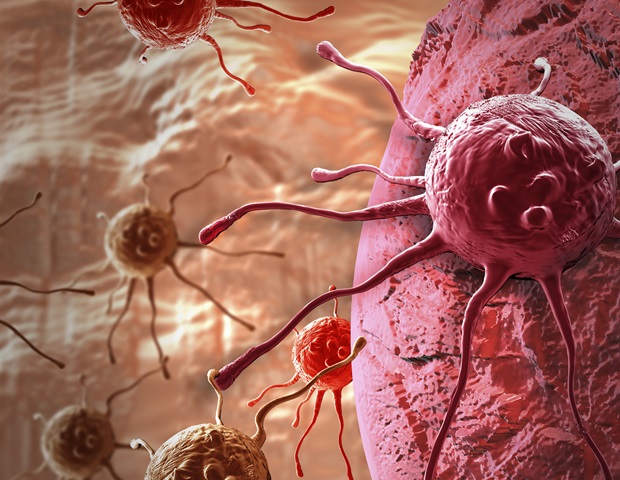

Researchers at the University of California, Davis, have developed an investigational brain-computer interface (BCI) that could restore the voices of people who have lost the ability to speak. In early results, the technology enabled a person to communicate in real time through synthesized speech generated directly from his brain activity.

The study, published in the journal Nature, describes how a participant with amyotrophic lateral sclerosis (ALS) conversed with his family through a computer that translated his neural signals into voice. Beyond producing spoken words, the system conveys natural nuances of speech, including intonation, and even allowed the participant to sing simple melodies.

Sergey Stavisky, the senior author of the study, contrasted the new approach with the team's earlier work: “Translating neural activity into text, which is how our previous speech brain-computer interface works, is akin to text messaging. It’s a big improvement compared to standard assistive technologies, but it still leads to delayed conversation. By comparison, this new real-time voice synthesis is more like a voice call.” The analogy captures the goal of the technology: conversation without the delays of text-based communication.

The investigational BCI was used in the BrainGate2 clinical trial at UC Davis Health. It consists of four microelectrode arrays surgically implanted into the brain region responsible for speech production. During the trial, researchers collected data while the participant attempted to speak sentences displayed on a computer screen. The setup is designed to record this brain activity and translate it into spoken words.

According to Maitreyee Wairagkar, the first author of the study, a major challenge in real-time voice synthesis has been determining precisely when and how a person with speech loss is trying to speak. The team's algorithms address this by mapping neural activity to intended sounds at each moment in time. Wairagkar explained, “This makes it possible to synthesize nuances in speech and gives the participant control over the cadence of his BCI-voice.”

The system translated the participant's neural signals into audible speech within one-fortieth of a second (25 milliseconds). Researchers noted that this delay is comparable to the natural lag a person experiences between speaking and hearing their own voice, which is what allows the exchange to feel like natural conversation.
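To make the latency figure concrete, the short Python sketch below converts one-fortieth of a second to milliseconds and illustrates the general idea of streaming output: emitting a piece of audio for each incoming frame of neural data rather than waiting for a whole sentence. It is a toy illustration only, not the study's algorithm; the names `stream_decode` and `toy_decoder` and the frame labels are hypothetical.

```python
from fractions import Fraction

# Figure reported in the article: neural signals become audible speech
# within one-fortieth of a second.
LATENCY_S = Fraction(1, 40)


def latency_ms(latency_s: Fraction) -> float:
    """Convert a latency in seconds to milliseconds."""
    return float(latency_s * 1000)


def stream_decode(frames, decode_frame):
    """Yield one decoded chunk per incoming frame.

    Output is produced as each frame arrives, so a listener does not
    have to wait for the end of the sentence.
    """
    for frame in frames:
        yield decode_frame(frame)


def toy_decoder(frame):
    """Hypothetical stand-in for a real decoder: returns a placeholder label."""
    return f"audio<{frame}>"


delay = latency_ms(LATENCY_S)
chunks = list(stream_decode(["f0", "f1", "f2"], toy_decoder))
print(delay, chunks)
```

Because `stream_decode` is a generator, each chunk becomes available as soon as its frame has been processed, which mirrors the "voice call" rather than "text message" behavior described in the article.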

The brain-computer interface also allowed the participant to say words that were new to the system and to make spontaneous interjections. He could adjust the intonation of the computer-generated voice to ask a question or to emphasize specific words in a sentence.

The BCI relies on artificial intelligence algorithms that translate brain activity into synthesized speech almost instantaneously. The researchers caution, however, that although the findings are encouraging, brain-to-voice neuroprostheses remain at an early stage of development. A key limitation is that the study involved only one participant, who has ALS, so the results will need to be replicated in more participants before they can be considered validated.